Attention, Perception, & Psychophysics, 1st of Feb 2025

Authors

Zhaoying Zhang

Zachary Hamblin-Frohman

Caleb De Odorico

Koralalage Don Raveen Amarasekera

Stefanie I. Becker

Abstract

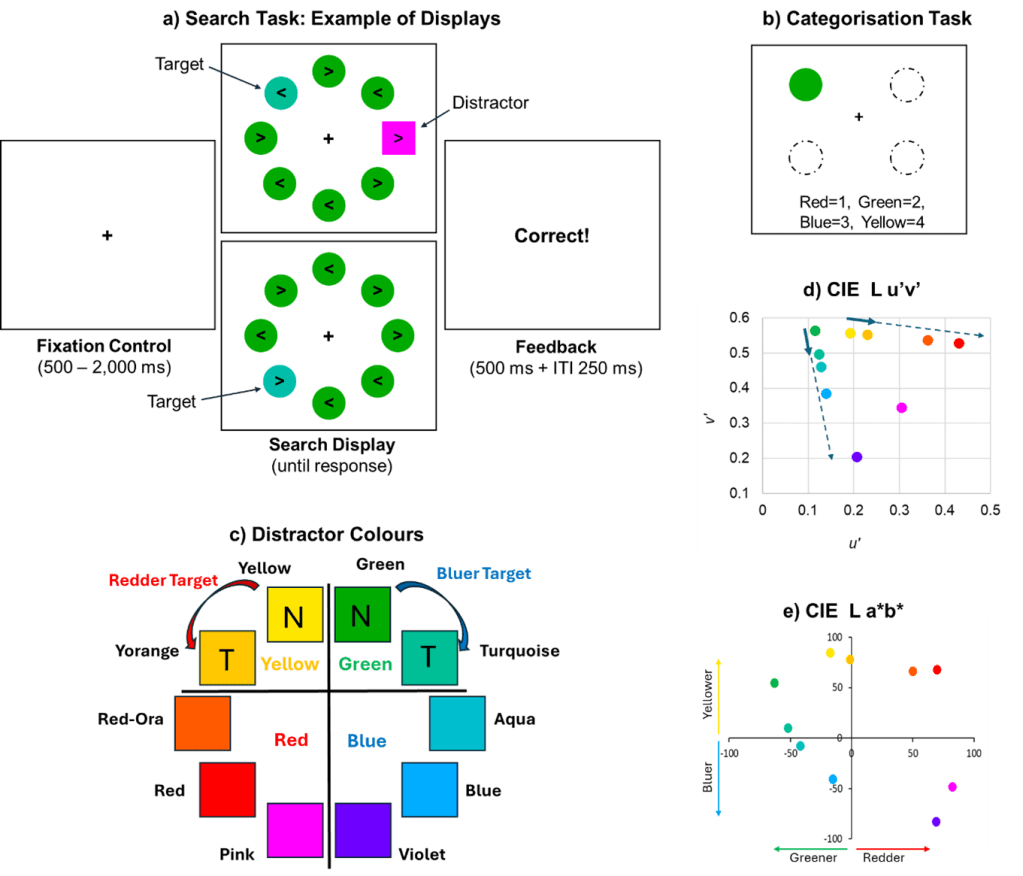

Our visual system can select visual information by tuning attention to task-relevant objects. The most prominent models of attention assume that we tune attention to the specific feature value of a sought-after object (e.g., orange). However, subsequent research has shown that attention can be tuned to the relative feature that the target has in relation to other items in the context (e.g., redder), in line with a Relational Account. So far, it is unknown where the boundaries of relational search lie. Is tuning to relative colours limited by colour category boundaries, so that attention is restricted to the category of the target? Or can the boundaries of relational tuning be predicted by a relational vector account, or by tuning directions in CIE colour space? Here we tested these questions in a colour search task by measuring eye movements to an irrelevant distractor. In a subsequent categorisation task, participants also had to categorise all possible colours as red, blue, green or yellow. The results showed that distractors that matched the relative colour of the target reliably attracted attention and the gaze, including when they were categorised as belonging to a different colour category from the target. Relational tuning is thus not limited by category boundaries. Different conceptualisations of CIE colour space also did not seem to explain the results, so the boundaries of relational tuning are currently best predicted by subjective colour comparisons (e.g., judgements about which colours are redder).

Reference

In print. Coming soon!
